Homelab Kubernetes cluster with Talos, Matchbox and Cilium

Intro

Some time ago, I deployed a Kubernetes cluster in my homelab to run some workloads and test things. Back then, I used Ubuntu Server with plain kubeadm to bootstrap the nodes. While it worked, maintaining the OS layer was not much fun.

Then I came across Talos and started using it as the Linux distribution on my nodes. My first experience with Talos was the good old routine: download the boot assets, write them to a USB stick and go from there.

Recently, I decided to improve this setup and have the nodes deploy automatically via iPXE. This write-up covers the steps to get there.

Talos: Talos Linux is a distribution dedicated to running Kubernetes. It can be deployed almost anywhere, which makes it an excellent choice for a homelab. By default, it contains only what is necessary to run Kubernetes. It doesn’t provide shell access: everything goes through an API. It’s also quite straightforward to manage and upgrade.

Matchbox: Although you could simply use a USB stick and `talosctl` to configure Talos nodes, I decided to deploy and bootstrap my nodes via iPXE. Matchbox helps here by serving the correct configuration file (control plane vs. worker node) depending on the node’s MAC address.

Cilium: Cilium is my cluster CNI. Aside from its networking capabilities, it can also provide load balancer IP address management (LB IPAM), which avoids deploying an additional tool like MetalLB.

Sources

A lot of what is detailed below has been sourced from different places:

Preparation

Note: I started writing this months ago. I have updated some things, but some of the version numbers might not match the current releases. Just don’t blindly copy-paste things ;)

Matchbox networking prerequisites

As I’m using a Raspberry Pi with Pi-hole as my DHCP server, I will skip the general dnsmasq installation and configuration and just focus on what’s needed to make it use Matchbox as the PXE service.

Create an additional config file in the /etc/dnsmasq.d folder…

```bash
sudo vim /etc/dnsmasq.d/07-tftp-server.conf
```

…and add the following.

```
enable-tftp
tftp-root=/var/lib/tftpboot
dhcp-userclass=set:ipxe,iPXE
pxe-service=tag:#ipxe,x86PC,"PXE chainload to iPXE",undionly.kpxe
# Make sure the hostname points to the machine Matchbox is deployed to
pxe-service=tag:ipxe,x86PC,"iPXE",http://matchbox.lan:8080/boot.ipxe
```

You will probably need to restart the dnsmasq service.

Next, download http://boot.ipxe.org/undionly.kpxe into the tftp-root (in this case /var/lib/tftpboot).
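If you would rather script these two steps than edit files by hand, here is a minimal sketch. `render_tftp_conf` and `stage_undionly` are hypothetical helper names: the first prints the same dnsmasq options as above with the Matchbox URL and TFTP root as parameters, and the second assumes undionly.kpxe has already been downloaded locally.

```bash
#!/usr/bin/env bash
# Sketch: render the dnsmasq chainloading snippet and stage undionly.kpxe.
# Both functions are illustrative helpers, not part of dnsmasq or Matchbox.

# render_tftp_conf MATCHBOX_URL TFTP_ROOT
render_tftp_conf() {
  local matchbox_url="$1" tftp_root="$2"
  cat <<EOF
enable-tftp
tftp-root=${tftp_root}
# Clients whose DHCP user class is "iPXE" get the "ipxe" tag.
dhcp-userclass=set:ipxe,iPXE
# Plain PXE firmware (no "ipxe" tag) is first chainloaded into iPXE...
pxe-service=tag:#ipxe,x86PC,"PXE chainload to iPXE",undionly.kpxe
# ...and iPXE itself is pointed at the Matchbox boot script.
pxe-service=tag:ipxe,x86PC,"iPXE",${matchbox_url}/boot.ipxe
EOF
}

# stage_undionly SRC_FILE TFTP_ROOT
stage_undionly() {
  local src="$1" tftp_root="$2"
  # Refuse to install a missing or empty (e.g. failed download) file.
  [ -s "$src" ] || { echo "missing or empty file: $src" >&2; return 1; }
  mkdir -p "$tftp_root"
  install -m 0644 "$src" "$tftp_root/undionly.kpxe"
}
```

For example, `render_tftp_conf http://matchbox.lan:8080 /var/lib/tftpboot | sudo tee /etc/dnsmasq.d/07-tftp-server.conf`, then, from a root shell, `stage_undionly ./undionly.kpxe /var/lib/tftpboot`.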

Matchbox installation

Download the latest Matchbox release. As I’m running Matchbox on a Raspberry Pi, I’m using the ARM64 release below. Make sure to use the one that matches your architecture.
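To avoid grabbing the wrong archive, a small sketch can derive the architecture suffix from `uname -m`. It assumes the release assets keep the `matchbox-<version>-linux-<arch>.tar.gz` naming used in the commands below, and it only handles amd64 and arm64; `matchbox_arch` and `matchbox_release_url` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: build the Matchbox release URL for the current (or given) machine.

# Map `uname -m` output to the suffix used in the release archive names.
matchbox_arch() {
  case "$1" in
    x86_64 | amd64) echo "amd64" ;;
    aarch64 | arm64) echo "arm64" ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# matchbox_release_url VERSION [UNAME_M]
matchbox_release_url() {
  local version="$1" arch
  arch="$(matchbox_arch "${2:-$(uname -m)}")" || return 1
  echo "https://github.com/poseidon/matchbox/releases/download/${version}/matchbox-${version}-linux-${arch}.tar.gz"
}
```

Usage: `url="$(matchbox_release_url v0.11.0)"` followed by `wget "$url" "$url.asc"` fetches both the archive and its signature.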

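```bash
# Download the latest release and its detached signature
wget https://github.com/poseidon/matchbox/releases/download/v0.11.0/matchbox-v0.11.0-linux-arm64.tar.gz
wget https://github.com/poseidon/matchbox/releases/download/v0.11.0/matchbox-v0.11.0-linux-arm64.tar.gz.asc
# Verify the signature
gpg --keyserver keyserver.ubuntu.com --recv-key 2E3D92BF07D9DDCCB3BAE4A48F515AD1602065C8
gpg --verify matchbox-v0.11.0-linux-arm64.tar.gz.asc matchbox-v0.11.0-linux-arm64.tar.gz
# Untar (same version as the archive above) and enter the extracted directory
tar xzvf matchbox-v0.11.0-linux-arm64.tar.gz
cd matchbox-v0.11.0-linux-arm64
```

Copy the binary to an appropriate location in your `$PATH`.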

```bash
sudo cp matchbox /usr/local/bin
```

Create a dedicated user for the Matchbox service.

```bash
sudo useradd -U matchbox
sudo mkdir -p /var/lib/matchbox/assets
sudo chown -R matchbox:matchbox /var/lib/matchbox
```

Copy the provided Matchbox systemd unit file.

```bash
sudo cp contrib/systemd/matchbox.service /etc/systemd/system/matchbox.service
```

Now edit the systemd service…

```bash
sudo systemctl edit matchbox
```

…and enable the gRPC API. This is needed because we will later use OpenTofu to configure the Matchbox profiles for the Talos nodes. If you don’t intend to use OpenTofu and will configure the Matchbox profiles by other means, you can leave it as is.

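```ini
[Service]
Environment="MATCHBOX_ADDRESS=0.0.0.0:8080"
Environment="MATCHBOX_LOG_LEVEL=debug"
Environment="MATCHBOX_RPC_ADDRESS=0.0.0.0:8081"
```

Finally, start and enable the service. Note that with the gRPC API enabled, Matchbox expects its TLS files in /etc/matchbox (see the TLS certificate section below), so if the service fails to start at this point, generate the certificates first.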

```bash
sudo systemctl daemon-reload
sudo systemctl start matchbox
sudo systemctl enable matchbox
```

Talos configuration files

Now you need to generate the Talos configuration files for our machines.

192.168.1.50 is a Virtual IP that will be shared by the controlplane nodes. It will serve as the main API address for Kubernetes.
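As an alternative to editing the generated files by hand, as done in the rest of this section, `talosctl gen config` can apply patches while generating them (`--config-patch` for every node, `--config-patch-control-plane` for control plane nodes only). The sketch below writes two such patch files with the values used in this post; `write_talos_patches` and the file names are hypothetical. It also sets `cluster.network.cni.name: none`, which the Talos Cilium guide uses so that the default Flannel CNI is not deployed.

```bash
#!/usr/bin/env bash
# Sketch: express the manual machine-config edits as Talos config patches.

# write_talos_patches OUT_DIR VIP INTERFACE INSTALLER_IMAGE
write_talos_patches() {
  local out="$1" vip="$2" iface="$3" image="$4"
  mkdir -p "$out"

  # Every node: install disk, installer image (with extensions), Longhorn mount.
  cat >"$out/common-patch.yaml" <<EOF
machine:
  install:
    diskSelector:
      size: '>= 500GB'
    image: ${image}
  kubelet:
    extraMounts:
      - destination: /var/lib/longhorn
        type: bind
        source: /var/lib/longhorn
        options:
          - bind
          - rshared
          - rw
EOF

  # Control plane only: shared VIP, no default CNI, no kube-proxy.
  cat >"$out/controlplane-patch.yaml" <<EOF
machine:
  network:
    interfaces:
      - interface: ${iface}
        dhcp: true
        vip:
          ip: ${vip}
cluster:
  network:
    cni:
      name: none
  proxy:
    disabled: true
EOF
}
```

Usage: `write_talos_patches patches 192.168.1.50 eno1 factory.talos.dev/installer/613e1592b2da41ae5e265e8789429f22e121aab91cb4deb6bc3c0b6262961245:v1.8.2`, then `talosctl gen config talos-k8s-metal-tutorial https://192.168.1.50:6443 --config-patch @patches/common-patch.yaml --config-patch-control-plane @patches/controlplane-patch.yaml`. Keeping the patches in version control makes regenerating the configs repeatable.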

```bash
talosctl gen config talos-k8s-metal-tutorial https://192.168.1.50:6443
# created controlplane.yaml
# created worker.yaml
# created talosconfig
```

In controlplane.yaml, add the VIP that will be shared between the control plane nodes.

```yaml
...
machine:
  network:
    # `interfaces` is used to define the network interface configuration.
    interfaces:
      - interface: eno1 # The interface name (adjust it to match your hardware).
        dhcp: true # Indicates if DHCP should be used to configure the interface.
        # Virtual (shared) IP address configuration.
        vip:
          ip: 192.168.1.50 # Specifies the IP address to be used.
...
```

As I am using Longhorn to provide local block storage to my workloads, I will also add the following to both worker.yaml and controlplane.yaml. If you don’t need Longhorn, you can skip this step.

1...

2machine:

3 kubelet:

4 extraMounts:

5 - destination: /var/lib/longhorn # Destination is the absolute path where the mount will be placed in the container.

6 type: bind # Type specifies the mount kind.

7 source: /var/lib/longhorn # Source specifies the source path of the mount.

8 # Options are fstab style mount options.

9 options:

10 - bind

11 - rshared

12 - rw

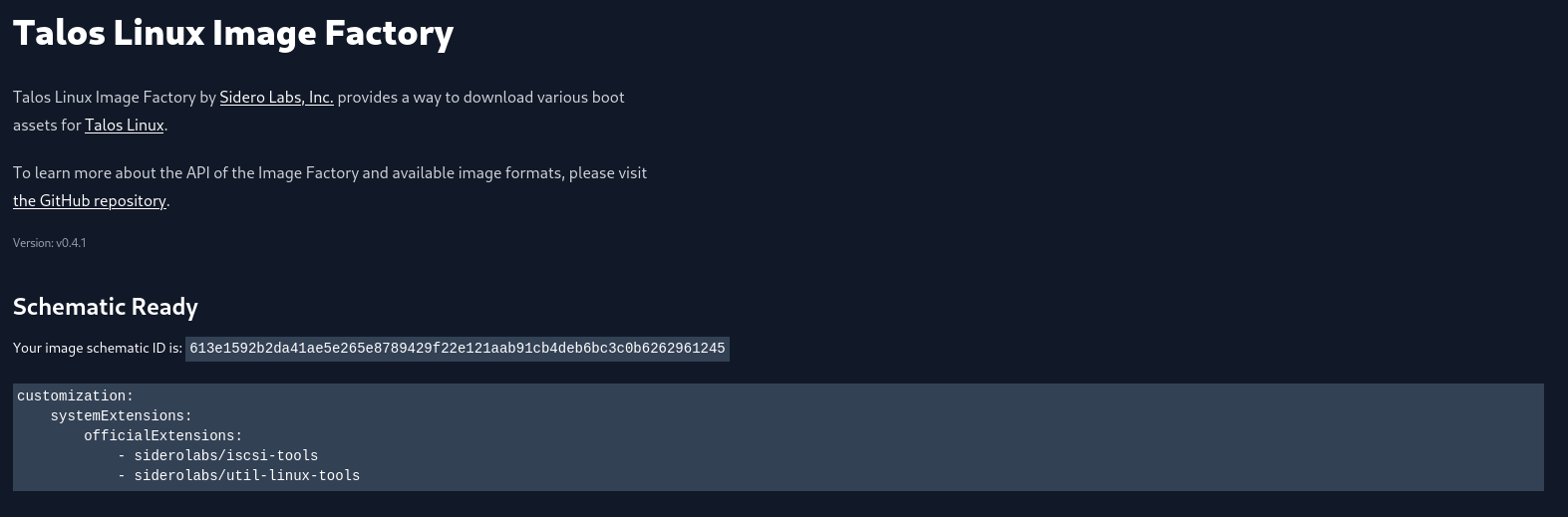

13...I will also change the default installer, as Longhorn requires some specific system extensions to function. For this, I use Talos Linux Image Factory to generate a schematic ID.

The schematic ID is then used to replace the default installer image in both worker.yaml and controlplane.yaml.
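If you prefer the command line over the Image Factory web UI, note that a schematic is a small YAML document. This sketch renders one for a list of official extensions and builds the installer reference in the same `factory.talos.dev/installer/<id>:<version>` form used in this post; `render_schematic` and `installer_image` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: Image Factory schematic for official extensions + installer reference.

# render_schematic EXTENSION...
render_schematic() {
  local ext
  printf 'customization:\n  systemExtensions:\n    officialExtensions:\n'
  for ext in "$@"; do
    printf '      - %s\n' "$ext"
  done
}

# installer_image SCHEMATIC_ID TALOS_VERSION
installer_image() {
  printf 'factory.talos.dev/installer/%s:%s\n' "$1" "$2"
}
```

Usage: `render_schematic siderolabs/iscsi-tools siderolabs/util-linux-tools > schematic.yaml`, then `curl -X POST --data-binary @schematic.yaml https://factory.talos.dev/schematics` returns a JSON document whose `id` field is the schematic ID to pass to `installer_image` together with the Talos version (e.g. v1.8.2).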

Note that depending on your hardware configuration, you may also need to change the diskSelector. The one below picks any attached disk of 500 GB or more; you can also specify the device directly with `machine.install.disk` (for example /dev/sda).

```yaml
machine:
  install:
    diskSelector:
      size: '>= 500GB' # Disk size.
    image: factory.talos.dev/installer/613e1592b2da41ae5e265e8789429f22e121aab91cb4deb6bc3c0b6262961245:v1.8.2
```

Last thing: I’m going to disable the default CNI (Flannel) and kube-proxy, as Cilium will replace both. This is only configured in controlplane.yaml.

```yaml
cluster:
  network:
    cni:
      name: none # Don't deploy the default CNI (Flannel); Cilium is installed later.
  proxy:
    disabled: true # Disable kube-proxy deployment on cluster bootstrap.
```

Using talosctl, you can verify that the configuration files are still valid.

```bash
talosctl validate --config controlplane.yaml --mode metal
#   controlplane.yaml is valid for metal mode
talosctl validate --config worker.yaml --mode metal
#   worker.yaml is valid for metal mode
```

The last step is to copy both worker.yaml and controlplane.yaml to the assets folder on the Matchbox host.

```
tree /var/lib/matchbox/
/var/lib/matchbox/
|-- assets
|   `-- talos
|       |-- controlplane.yaml
|       `-- worker.yaml
```

Generate Matchbox TLS certificate

Because I’m using OpenTofu to configure the Matchbox profiles, I need to generate TLS certificates to authenticate with the Matchbox gRPC API.

```bash
export SAN=DNS.1:matchbox.lan,IP.1:192.168.1.33 # make sure this matches your environment
```

Run the script provided in the Matchbox release archive to generate the TLS certificates.

```bash
cd scripts/tls
./cert-gen
```

Then move the server TLS files to the Matchbox server’s default location.

```bash
sudo mkdir -p /etc/matchbox
sudo cp ca.crt server.crt server.key /etc/matchbox
sudo chown -R matchbox:matchbox /etc/matchbox
# Restart Matchbox so it picks up the certificates
sudo systemctl restart matchbox
```

Make sure to save the client TLS files (client.crt, client.key and ca.crt) for later: they are required to configure Matchbox with OpenTofu.

Create Matchbox profiles

I will be using OpenTofu to create the Matchbox profiles with the Matchbox provider, and the Talos provider to get the correct schematic ID for the boot assets. The complete code is available in this repo.

Start by configuring the providers, then copy the client TLS files you saved earlier to a certs folder next to the configuration files.

```hcl
terraform {
  required_providers {
    matchbox = {
      source  = "poseidon/matchbox"
      version = "0.5.4"
    }
    talos = {
      source  = "siderolabs/talos"
      version = "0.7.0-alpha.0"
    }
  }
}

provider "matchbox" {
  endpoint    = "matchbox.lan:8081" # make sure this matches your environment
  client_cert = file("certs/client.crt")
  client_key  = file("certs/client.key")
  ca          = file("certs/ca.crt")
}
```

Also add the configuration for the Talos Image Factory. This will give us the schematic ID for our boot assets.

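```hcl
# Input variables used by the resources below (values come from the *.tfvars file)
variable "talos_version" {
  type = string
}

variable "talos_extensions" {
  type = list(string)
}

variable "controlplanes" {
  type = map(string) # node name => MAC address
}

variable "workers" {
  type = map(string) # node name => MAC address
}

data "talos_image_factory_extensions_versions" "image_factory" {
  # Talos version to look up the extensions for
  talos_version = var.talos_version
  filters = {
    names = var.talos_extensions
  }
}

resource "talos_image_factory_schematic" "image_factory" {
  schematic = yamlencode(
    {
      customization = {
        systemExtensions = {
          officialExtensions = data.talos_image_factory_extensions_versions.image_factory.extensions_info.*.name
        }
      }
    }
  )
}
```

Create two locals for the boot assets. The cool trick here is to point directly to the Talos Image Factory, so there is no need to store these files on the Matchbox host.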

```hcl
locals {
  kernel = "https://pxe.factory.talos.dev/image/${talos_image_factory_schematic.image_factory.id}/${var.talos_version}/kernel-amd64"
  initrd = "https://pxe.factory.talos.dev/image/${talos_image_factory_schematic.image_factory.id}/${var.talos_version}/initramfs-amd64.xz"
}
```

Add the matchbox_profile and matchbox_group resources for the control plane nodes.

The talos.config kernel argument must match the URL of controlplane.yaml on the Matchbox host: it’s this parameter that tells Talos where to find the node’s configuration file.

```hcl
resource "matchbox_profile" "controlplane" {
  name   = "controlplane"
  kernel = local.kernel
  initrd = [local.initrd]
  args = [
    "initrd=initramfs.xz",
    "init_on_alloc=1",
    "slab_nomerge",
    "pti=on",
    "console=tty0",
    "console=ttyS0",
    "printk.devkmsg=on",
    "talos.platform=metal",
    "talos.config=http://matchbox.lan:8080/assets/talos/controlplane.yaml"
  ]
}

resource "matchbox_group" "controlplane" {
  for_each = var.controlplanes
  name     = each.key
  profile  = matchbox_profile.controlplane.name
  selector = {
    mac = each.value
  }
}
```

Then simply repeat the same for the worker nodes.

```hcl
resource "matchbox_profile" "worker" {
  name   = "worker"
  kernel = local.kernel
  initrd = [local.initrd]
  args = [
    "initrd=initramfs.xz",
    "init_on_alloc=1",
    "slab_nomerge",
    "pti=on",
    "console=tty0",
    "console=ttyS0",
    "printk.devkmsg=on",
    "talos.platform=metal",
    "talos.config=http://matchbox.lan:8080/assets/talos/worker.yaml"
  ]
}

resource "matchbox_group" "worker" {
  for_each = var.workers
  name     = each.key
  profile  = matchbox_profile.worker.name
  selector = {
    mac = each.value
  }
}
```

Your *.tfvars file should look something like this, with the MAC address of each node. This is how Matchbox determines which profile to use.

```hcl
talos_version    = "v1.8.2"
talos_extensions = ["iscsi-tools", "util-linux-tools"]
controlplanes = {
  controlplane-node01 = "00:00:00:00:00:00"
  controlplane-node02 = "00:00:00:00:00:00"
  controlplane-node03 = "00:00:00:00:00:00"
}
workers = {
  worker-node01 = "00:00:00:00:00:00"
}
```

You can now apply the configuration.

```bash
tofu init
tofu plan
tofu apply
```

If all went well, the groups and profiles will be created on the Matchbox host.

```
tree /var/lib/matchbox/
/var/lib/matchbox/
|-- assets
|   `-- talos
|       |-- controlplane.yaml
|       `-- worker.yaml
|-- groups
|   |-- controlplane-node01.json
|   |-- controlplane-node02.json
|   |-- controlplane-node03.json
|   `-- worker-node01.json
`-- profiles
    |-- controlplane.json
    `-- worker.json
```

Bootstrap the nodes

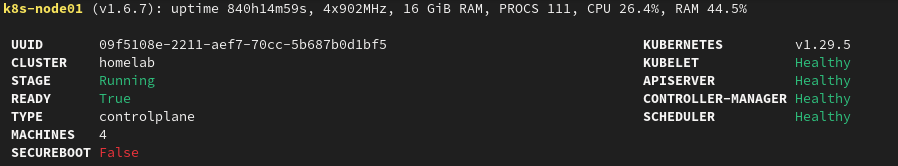

This is it! You can now boot your first nodes. If all goes well, it should look similar to this :

Now you can bootstrap your cluster. You only need to run this command once, against one of the control plane nodes:

```bash
talosctl bootstrap --talosconfig ./talosconfig -e 192.168.1.20 -n 192.168.1.20
```

At this point, the other nodes should automatically join the cluster if they are already running.

Once all nodes have finished bootstrapping, most of the indicators at the top of the screen should turn green. You can then retrieve the kubeconfig with `talosctl kubeconfig -n 192.168.1.20`, which merges it into ~/.kube/config by default.
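Right after bootstrapping, the Kubernetes API can take a few minutes to answer. A small retry helper avoids re-running commands by hand while waiting; `retry` here is a hypothetical plain-bash function, not a kubectl or talosctl feature.

```bash
#!/usr/bin/env bash
# Sketch: run a command until it succeeds or the attempts run out.

# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    "$@" && return 0
    if ((i < attempts)); then
      echo "attempt $i/$attempts failed, retrying in ${delay}s: $*" >&2
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, `retry 30 10 kubectl get nodes` polls every 10 seconds for up to 30 attempts. Once Cilium is installed, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` covers the wait for the Ready state.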

If you disabled kube-proxy earlier, `kubectl get nodes` will show the nodes in a NotReady state: we still need to deploy Cilium.

Cilium

Install Cilium

The recommended way to install Cilium is with Helm.

```bash
helm repo add cilium https://helm.cilium.io/
helm repo update
```

For the most part, you can reuse the recommended options from the Talos documentation.

Just add `l2announcements.enabled=true`, as the Cilium L2 announcements feature is needed alongside LB IPAM (which Cilium enables by default): LB IPAM assigns IP addresses to LoadBalancer services, and L2 announcements make those addresses reachable on the local network.

```bash
helm install \
  cilium cilium/cilium \
  --version 1.16.3 \
  --namespace kube-system \
  --set ipam.mode=kubernetes \
  --set=kubeProxyReplacement=true \
  --set=securityContext.capabilities.ciliumAgent="{CHOWN,KILL,NET_ADMIN,NET_RAW,IPC_LOCK,SYS_ADMIN,SYS_RESOURCE,DAC_OVERRIDE,FOWNER,SETGID,SETUID}" \
  --set=securityContext.capabilities.cleanCiliumState="{NET_ADMIN,SYS_ADMIN,SYS_RESOURCE}" \
  --set=cgroup.autoMount.enabled=false \
  --set=cgroup.hostRoot=/sys/fs/cgroup \
  --set=k8sServiceHost=localhost \
  --set=k8sServicePort=7445 \
  --set=l2announcements.enabled=true
```

With cilium-cli, `cilium status` makes it easy to check the deployment status (this output was captured with an older Cilium release, hence the v1.15.5 images):

```
    /¯¯\
 /¯¯\__/¯¯\    Cilium:             OK
 \__/¯¯\__/    Operator:           OK
 /¯¯\__/¯¯\    Envoy DaemonSet:    disabled (using embedded mode)
 \__/¯¯\__/    Hubble Relay:       disabled
    \__/       ClusterMesh:        disabled

Deployment             cilium-operator    Desired: 2, Ready: 2/2, Available: 2/2
DaemonSet              cilium             Desired: 4, Ready: 4/4, Available: 4/4
Containers:            cilium             Running: 4
                       cilium-operator    Running: 2
Cluster Pods:          75/75 managed by Cilium
Helm chart version:
Image versions         cilium             quay.io/cilium/cilium:v1.15.5@sha256:4ce1666a73815101ec9a4d360af6c5b7f1193ab00d89b7124f8505dee147ca40: 4
                       cilium-operator    quay.io/cilium/operator-generic:v1.15.5@sha256:f5d3d19754074ca052be6aac5d1ffb1de1eb5f2d947222b5f10f6d97ad4383e8: 2
```

Once everything is green, the Kubernetes nodes should also report a Ready state.

Enable L2 announcement and LB IPAM features

This is quite straightforward.

Define an IP address pool:

```yaml
apiVersion: "cilium.io/v2alpha1"
kind: CiliumLoadBalancerIPPool
metadata:
  name: "my-pool"
spec:
  cidrs:
    - start: "192.168.1.60"
      stop: "192.168.1.99"
```

Create an L2 announcement policy:

```yaml
apiVersion: cilium.io/v2alpha1
kind: CiliumL2AnnouncementPolicy
metadata:
  name: default-l2-announcement-policy
  namespace: kube-system
spec:
  externalIPs: true
  loadBalancerIPs: true
```

Now, whenever you create a service of type LoadBalancer, it should automatically get an IP address from the defined pool, and the service will be reachable from outside the cluster.

Conclusion

I see various ways this setup could be improved, but overall I think it is a nice starting point for a K8s homelab cluster with Talos. I hope this was helpful to someone out there :)